Running a local Kubernetes cluster on your own hardware is a great way to save money, but how can we safely expose its services to the Internet? On a residential connection, we also have to account for dynamic IP addresses, bad actors, and ISP rules on hosting.

By using a globally-accessible proxy to route traffic to our Kubernetes cluster, we gain the following advantages:

- Routable static IP address: no dynamic assignment or CG-NAT problems

- Network agnostic: no port forwarding or any special router configuration required

- Protection from bad actors: the home IP address stays hidden, so DDoS attacks and port scans hit the proxy instead of the residential connection

- Hide traffic from ISPs: hosting external services (e.g. websites on port 443) might break many providers’ terms of service agreements

- Load balancing and filtering: the proxy can spread traffic across multiple upstream servers and acts as a first line of defense against unwanted traffic

Prerequisites

To get started, we need hardware with a functioning Kubernetes cluster:

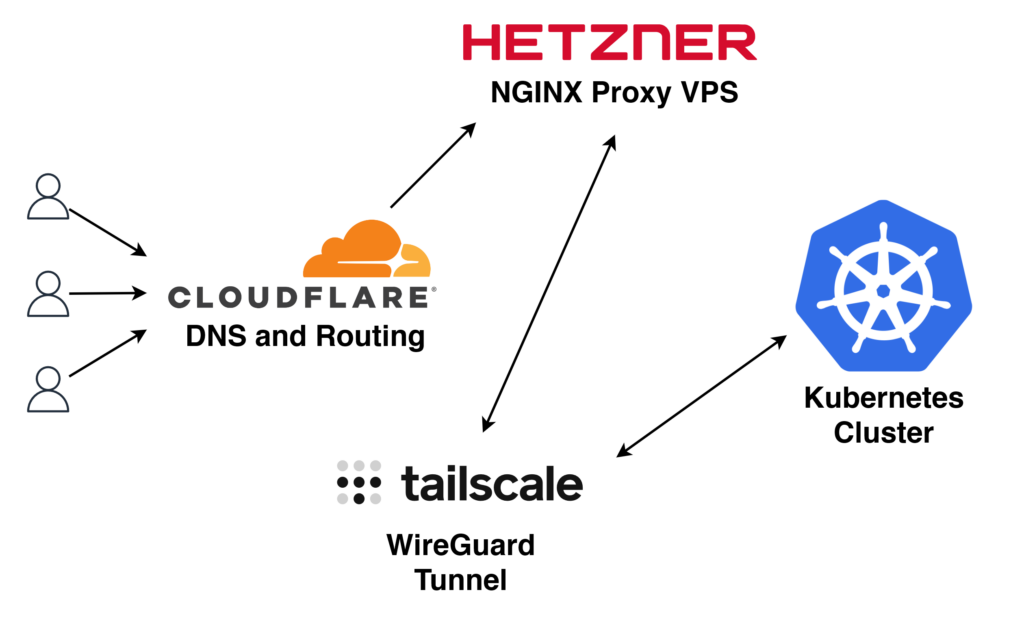

Architecture Overview

Our design will use three different systems to route and proxy traffic:

- Cloudflare – DNS and routing

- Hetzner – virtual private server for static IP and proxy

- Tailscale – the glue that connects our VPS and Kubernetes cluster

Setup

VPS Server

For our proxy, a Hetzner CPX11 VPS acts as our cloud ingress. Hetzner is a good fit for this use case:

- Incredibly cheap, the CPX11 starts at around $5/month

- Includes 20TB of outbound traffic per month

- 10Gbps bandwidth (shared)

- Powerful AMD chips: proxying 3Gbps barely cracks 50% CPU usage

Ultimately, make sure that the provider chosen has a point of presence close to your physical location. Hetzner Cloud has locations in Eastern and Western USA, as well as throughout Europe.

WireGuard Link

To link our global proxy and private Kubernetes cluster, we will use a WireGuard VPN connection between the two hosts. Instead of manually setting up WireGuard, we can use Tailscale to do all the heavy lifting for us. Tailscale also comes with many added benefits, such as NAT hole-punching (to bypass CG-NAT) and ACLs.

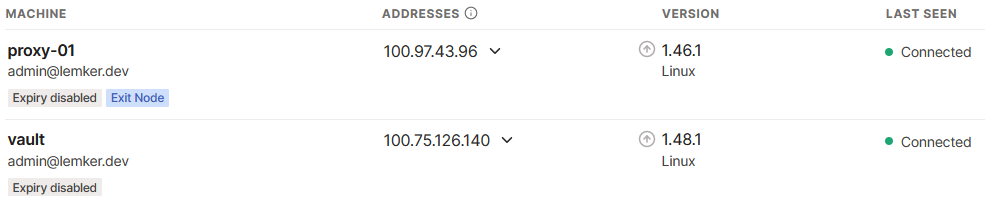

In this example, our NGINX proxy is called proxy-01, and our host is called vault. Install Tailscale on both machines, and set their expiry to Disabled. Optionally, set the proxy as an Exit Node using the following command:

    tailscale up --advertise-exit-node

Both nodes should now appear in the Tailscale web UI.
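The link can also be sanity-checked from the command line. Tailscale assigns node IPv4 addresses from the 100.64.0.0/10 range, so a quick test is whether the address reported for the upstream host falls inside it. The sketch below is only an illustration: the function name is_tailnet_ipv4 is hypothetical, and it performs a loose check on the first two octets rather than full address validation.

```shell
# Return success if the argument looks like an IPv4 address in 100.64.0.0/10,
# the range Tailscale assigns node addresses from.
# Loose check: only the first two octets are inspected.
is_tailnet_ipv4() {
    case "$1" in
        100.*.*.*) ;;
        *) return 1 ;;
    esac
    second=${1#100.}        # drop the leading "100."
    second=${second%%.*}    # keep only the second octet
    case "$second" in
        ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric octets
    esac
    [ "$second" -ge 64 ] && [ "$second" -le 127 ]
}
```

On the proxy, it could be used as `is_tailnet_ipv4 "$(tailscale ip -4 vault)" && echo "vault has a tailnet address"`, assuming the installed Tailscale version supports looking up a peer by name with `tailscale ip`.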

NGINX Proxy

NGINX was chosen as the proxy software, as HAProxy does not support UDP load balancing.

To proxy our traffic, we will use the magic of the NGINX stream module. Because the stream module forwards raw TCP/UDP traffic without parsing HTTP, even a very small VPS can process packets at near line speed. TLS is not terminated on the proxy; SSL termination is handled in Kubernetes.

To set up our NGINX server to act as a TCP/UDP reverse proxy, add the following at the top level of nginx.conf, outside any http block:

    stream {
        server {
            listen 443;
            listen 443 udp;
            proxy_pass vault:443;
            proxy_protocol on;
        }
    }

Using proxy_pass, we instruct NGINX to forward all traffic on TCP/UDP port 443 to our upstream Kubernetes cluster through the Tailscale network. DNS names are handled by Tailscale as well.

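The proxy_protocol setting makes NGINX prepend a PROXY protocol header, carrying the client's original IP address and port, to each connection it opens to the upstream. The ingress controller then uses that information to populate HTTP headers such as X-Forwarded-For. Without it, our upstream servers would not know that these connections are proxied and would see the proxy's address as the source IP.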
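To make this more concrete: the PROXY protocol works by writing a short header at the very start of each upstream connection, before any TLS bytes. In version 1 of the protocol that header is a single text line. The sketch below builds such a line for an IPv4 TCP connection; the function name proxy_v1_header and the addresses used with it are hypothetical and only serve as illustration.

```shell
# Build a PROXY protocol v1 header line for an IPv4 TCP connection.
# Arguments: client IP, destination IP, client port, destination port.
# The line is terminated by CRLF, as the v1 format requires.
proxy_v1_header() {
    printf 'PROXY TCP4 %s %s %s %s\r\n' "$1" "$2" "$3" "$4"
}
```

For example, `proxy_v1_header 203.0.113.7 100.64.0.2 51234 443` produces the line an upstream would read for a client at 203.0.113.7 connecting to port 443. A receiver that has the PROXY protocol enabled strips this line and treats the address in it as the real client address.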

Kubernetes Ingress

To allow the PROXY protocol into our Kubernetes cluster, we have to enable it for our ingress-nginx Helm chart through a ConfigMap. The K3s Helm module will automatically patch the deployment with our new values:

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: ingress-nginx-controller
      namespace: ingress-nginx
    data:
      use-forwarded-headers: "false"
      use-proxy-protocol: "true"
      use-http2: "true"

Now our Kubernetes ingress controller will use the correct source IP address as set by the NGINX downstream load balancer. As we are using a private VPN to link the two machines together, no checks were added to verify the authenticity of the reverse proxy. If the Kubernetes cluster were to be public, proxy connections should be restricted to only allow trusted downstream server IPs.

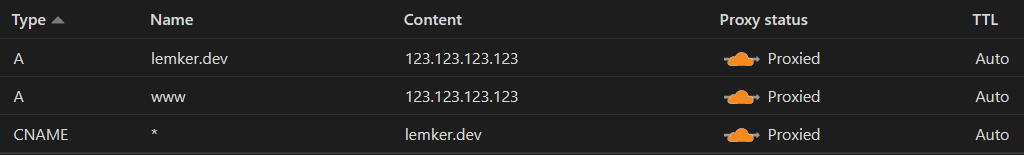

Cloudflare DNS

Cloudflare is used as our DNS provider, pointing domain names at our public VPS IP address. A wildcard CNAME record is used to route all traffic; because a CNAME can only reference another hostname, the IP address itself goes in an A record. Replace 123.123.123.123 with the VPS IP address:

Testing

Lastly, let’s test to make sure that our traffic is routed correctly. We should see that Kubernetes pods are receiving the correct source IP address of connecting clients. For our test pod, set up a whoami container with NGINX ingress:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami
      namespace: lemker-dev
      labels:
        app: whoami
    spec:
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: whoami
      template:
        metadata:
          labels:
            app: whoami
        spec:
          containers:
            - image: docker.io/containous/whoami:v1.5.0
              name: whoami
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: whoami
      namespace: lemker-dev
    spec:
      ports:
        - name: whoami
          port: 80
          targetPort: 80
      selector:
        app: whoami
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: whoami
      namespace: lemker-dev
      annotations:
        kubernetes.io/ingress.class: nginx
    spec:
      tls:
        - hosts:
            - "*.lemker.dev"
          secretName: wildcard-secret-lemker-dev
      rules:
        - host: whoami.lemker.dev
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: whoami
                    port:
                      number: 80

Navigating to whoami.lemker.dev, we can see that the pod receives all the correct proxy information and source IP from X-Original-Forwarded-For:

    Hostname: whoami-77759477c6-n9r6m
    IP: 127.0.0.1
    IP: ::1
    IP: 10.42.0.239
    IP: fe80::d4cf:d8ff:fe93:15bb
    RemoteAddr: 10.42.0.222:47274
    GET / HTTP/1.1
    Host: whoami.lemker.dev
    User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/118.0
    Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8
    Accept-Encoding: gzip, br
    Accept-Language: en-US,en;q=0.5
    Cdn-Loop: cloudflare
    Cf-Connecting-Ip: REDACTED
    Cf-Ipcountry: CA
    Cf-Ray: REDACTED
    Cf-Visitor: {"scheme":"https"}
    Sec-Fetch-Dest: document
    Sec-Fetch-Mode: navigate
    Sec-Fetch-Site: none
    Sec-Fetch-User: ?1
    Upgrade-Insecure-Requests: 1
    X-Forwarded-For: REDACTED
    X-Forwarded-Host: whoami.lemker.dev
    X-Forwarded-Port: 443
    X-Forwarded-Proto: https
    X-Forwarded-Scheme: https
    X-Original-Forwarded-For: REDACTED
    X-Real-Ip: REDACTED
    X-Request-Id: REDACTED
    X-Scheme: https
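Only a handful of those lines describe the client address, so when repeating the test from a terminal it can help to filter the response down to them. A minimal sketch, assuming the whoami response is piped in on standard input; the function name client_ip_lines is hypothetical.

```shell
# Keep only the lines of a whoami response that carry a client or peer address.
# Reads the response on stdin and prints the matching lines unchanged.
client_ip_lines() {
    grep -iE '^(RemoteAddr|Cf-Connecting-Ip|X-Forwarded-For|X-Original-Forwarded-For|X-Real-Ip):'
}
```

It could be used as `curl -s https://whoami.lemker.dev | client_ip_lines`. In the output above, RemoteAddr is an in-cluster address, while the forwarded headers are expected to carry the address of the connecting client.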
