Like most people starting out with containerizing every app possible, I frequently used Docker Compose to set up structured deployments with multiple components. I have used Compose for a few years now (after migrating from plain docker run commands); however, since my day-to-day work is in Kubernetes, it made no sense to keep learning and developing with an inferior tool.

For a single-node setup, we can use a Kubernetes distribution called K3s to greatly simplify installing and managing a K8s cluster. Kubernetes K3s offers many advantages over Docker Compose:

- K3s is bundled with the containerd engine, so there is no need to install a separate runtime

- Choose any ingress system

- Deployments using Helm are automatically managed by the K3s Helm manager

- Complex stacks can be deployed with just a few lines using Kubernetes operators

- The deployment strategy can easily be set to Recreate, RollingUpdate, etc., depending on your needs

- Learn how to create production-ready deployments (in my experience at least, Compose is never used in production environments)
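To illustrate the deployment-strategy point: a Deployment's spec.strategy field chooses between Recreate (stop all old pods, then start new ones) and RollingUpdate (replace pods gradually). The fragment below is a minimal sketch of a rolling strategy; the maxSurge and maxUnavailable values are illustrative assumptions, not settings taken from this setup.

```yaml
# Fragment of a Deployment spec (not a complete manifest)
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # allow one extra pod above the desired count during a rollout
      maxUnavailable: 0  # never drop below the desired count while updating
```

The whoami example later in this post uses Recreate instead, which is simpler but briefly takes the application offline during each update.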

Deploying the Stack

K3s works great out of the box, but with some additional tools we can achieve much better automation and functionality. In this example, the K3s stack consists of the following components:

- Kubernetes K3s – the main Kubernetes distribution

- Cloudflare – DNS management and DNS-01 certificate challenges

- Cert Manager – automatically create and renew SSL certificates

- Reflector – replicate certificates (secrets) in multiple namespaces

- Ingress-NGINX – cluster ingress manager

- Kubernetes Dashboard – observe Kubernetes deployments and assets

Kubernetes K3s

Before getting started, make sure to disable swap on the node. Even though it is not part of the K3s installation guide, disabling swap is standard practice on Kubernetes nodes for better performance. Of course, this is only possible when the host has enough memory.

Disable currently running swap:

```shell
swapoff -a
```

Prevent swap from mounting on boot by commenting out the swap entry in /etc/fstab (the swap file path may differ on your system):

```
#/swap.img none swap sw 0 0
```

Now it's time to install K3s. We will disable Traefik, since ingress-nginx will be used instead:

```shell
curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="server --disable traefik" sh -
```

Cert Manager and Reflector

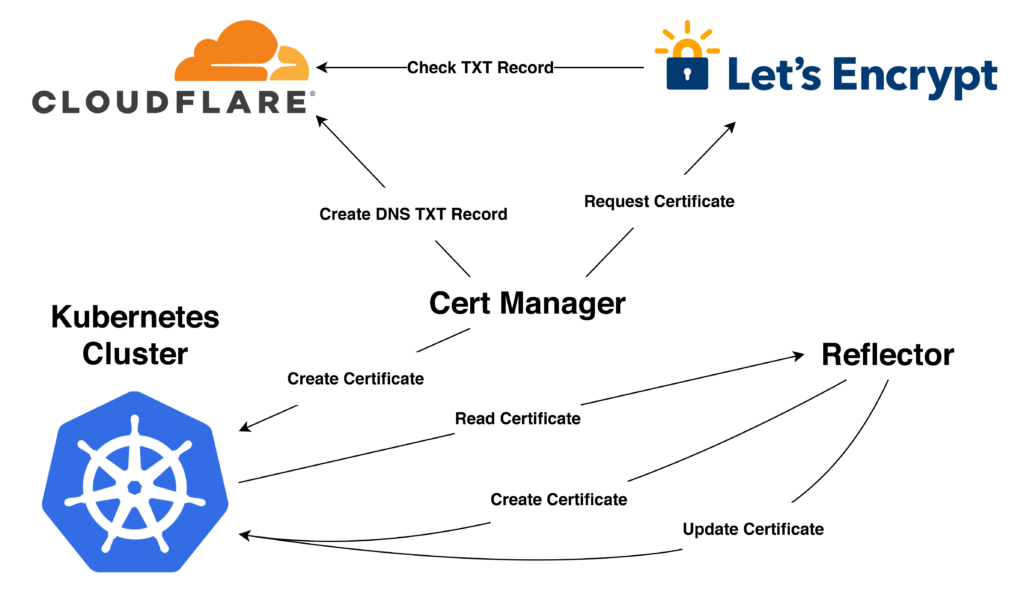

The Kubernetes Cert Manager is an amazing tool that takes care of certificate management end-to-end: it will create certificates using various challenge methods and renew them when needed.
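The HelmChart resources in this post are created in a helm-charts namespace, and Kubernetes rejects namespaced objects whose namespace does not exist yet. If that namespace has not been created already, a minimal manifest might look like this, applied once before any of the HelmChart resources:

```yaml
# Namespace that holds the HelmChart resources used in this post
apiVersion: v1
kind: Namespace
metadata:
  name: helm-charts
```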

We will install cert-manager as a Helm chart using the K3s Helm manager:

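```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: cert-manager
  namespace: helm-charts
spec:
  repo: https://charts.jetstack.io
  chart: cert-manager
  targetNamespace: cert-manager
  version: v1.12.3
  set:
    installCRDs: "true"
```

Next, create an API token in Cloudflare with permissions scoped to editing the DNS records of your domain (cert-manager uses it to manage the TXT records for the challenge), and save the token as a secret. For a ClusterIssuer, cert-manager looks up referenced secrets in its cluster resource namespace, which defaults to the namespace cert-manager runs in, hence the cert-manager namespace below: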

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: cloudflare-api-token-secret
  namespace: cert-manager
type: Opaque
stringData:
  api-token: <token>
```

Now we can create a ClusterIssuer, which will request certificates from Let's Encrypt, create the required TXT records in Cloudflare DNS, and save the certificates as secrets in Kubernetes. By using a dns01 challenge in our solver, we can generate certificates for our domain without any external access to the cluster (avoiding the standard HTTP-01 challenge, which requires Let's Encrypt to reach the cluster over HTTP). DNS-01 is also the only challenge type Let's Encrypt accepts for wildcard certificates:

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  # ClusterIssuers are cluster-scoped, so no namespace is set
  name: letsencrypt
spec:
  acme:
    email: <email>
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: issuer-letsencrypt
    solvers:
      - dns01:
          cloudflare:
            apiTokenSecretRef:
              key: api-token
              name: cloudflare-api-token-secret
            # Cloudflare account email; only required for global API key authentication
            email: <email>
```

Finally, we can create a wildcard certificate for our domain using Let's Encrypt. The wildcard certificate can then be attached to any ingress in our cluster, without requesting a new certificate for every application subdomain. Note that *.lemker.dev covers single-level subdomains such as whoami.lemker.dev, but not the apex domain lemker.dev itself:

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: wildcard-secret-lemker-dev
  namespace: cert-manager
spec:
  secretName: wildcard-secret-lemker-dev
  issuerRef:
    name: letsencrypt
    kind: ClusterIssuer
  commonName: "*.lemker.dev"
  dnsNames:
    - "*.lemker.dev"
  secretTemplate:
    annotations:
      reflector.v1.k8s.emberstack.com/reflection-allowed: "true"
      reflector.v1.k8s.emberstack.com/reflection-allowed-namespaces: "kubernetes-dashboard,lemker-dev"
      reflector.v1.k8s.emberstack.com/reflection-auto-enabled: "true"
      reflector.v1.k8s.emberstack.com/reflection-auto-namespaces: "kubernetes-dashboard,lemker-dev"
```

By default, pods and ingresses in Kubernetes can only reference secrets in their own namespace. This becomes a problem when we want to share the wildcard certificate between multiple namespaces. We also cannot simply issue a separate copy of the wildcard for every namespace, since Let's Encrypt rate-limits how many certificates can be issued for the same set of names.
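Because of those rate limits, it can help to test the issuer and certificate against the Let's Encrypt staging environment first; it has much looser limits but issues certificates that browsers do not trust. Below is a sketch of a staging ClusterIssuer that reuses the Cloudflare secret from above. The names letsencrypt-staging and issuer-letsencrypt-staging are hypothetical choices. While testing, point the Certificate's issuerRef.name at it, then switch back to letsencrypt.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging              # hypothetical name for the test issuer
spec:
  acme:
    email: <email>
    # Let's Encrypt staging endpoint: relaxed rate limits, untrusted certificates
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: issuer-letsencrypt-staging   # hypothetical; keeps the staging account key separate
    solvers:
      - dns01:
          cloudflare:
            apiTokenSecretRef:
              key: api-token
              name: cloudflare-api-token-secret
```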

With Reflector, we can easily replicate certificates (secrets) between namespaces in Kubernetes. When a new or updated certificate is generated in the cert-manager namespace, the secret is created or updated in the kubernetes-dashboard and lemker-dev namespaces.
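Installing Reflector itself is not shown above. Following the same pattern as cert-manager, it could be installed through the K3s Helm manager from the emberstack chart repository. This is a minimal sketch: the reflector target namespace is an arbitrary assumption, and the chart version is left unpinned here, so pinning one is advisable.

```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: reflector
  namespace: helm-charts
spec:
  repo: https://emberstack.github.io/helm-charts
  chart: reflector
  targetNamespace: reflector   # assumption: any dedicated namespace works here
  # version: <pin a chart version here>
```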

Ingress-NGINX

Instead of the Traefik ingress controller bundled with K3s, we can use a more standard Kubernetes ingress controller like ingress-nginx. Traefik also seems to struggle to forward the correct IP addresses of external users: even when using PROXY protocol, the incorrect address is passed.

The ingress controller routes each request to the right service based on its host and path, and terminates HTTPS for application services using the valid wildcard certificate generated by Cert Manager.

We can deploy the NGINX ingress controller using the K3s Helm manager:

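```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: ingress-nginx
  namespace: helm-charts
spec:
  repo: https://kubernetes.github.io/ingress-nginx
  chart: ingress-nginx
  targetNamespace: ingress-nginx
  valuesContent: |-
    controller:
      service:
        externalTrafficPolicy: Local
```

Setting externalTrafficPolicy: Local preserves the client's source IP address as traffic reaches the ingress controller, which then passes it on to the pods in headers such as X-Forwarded-For and X-Real-Ip. With the default Cluster policy, incoming traffic may be source-NATed by the node, so the ingress controller (and therefore the pods) only sees an internal cluster address instead of the real client.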
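The ingress-nginx chart can also render NGINX options itself through its controller.config value, which keeps the chart as the single owner of the ingress-nginx-controller ConfigMap instead of editing that ConfigMap separately. Below is a sketch of the HelmChart above with the PROXY protocol options described next folded into the values. Include the config block only if the upstream load balancer actually sends PROXY headers.

```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: ingress-nginx
  namespace: helm-charts
spec:
  repo: https://kubernetes.github.io/ingress-nginx
  chart: ingress-nginx
  targetNamespace: ingress-nginx
  valuesContent: |-
    controller:
      service:
        externalTrafficPolicy: Local
      # Rendered by the chart into the controller ConfigMap; values must be strings
      config:
        use-forwarded-headers: "false"
        use-proxy-protocol: "true"
```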

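If traffic arrives through an external load balancer or proxy that sends PROXY protocol headers, enable PROXY protocol support. With use-proxy-protocol enabled, NGINX expects every connection to start with a PROXY header, so connections without one will fail: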

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
  use-forwarded-headers: "false"
  use-proxy-protocol: "true"
```

Kubernetes Dashboard

Deploying the dashboard provides a nice visual representation of the health and status of the cluster. For some reason, deploying the dashboard with Helm seems to hang indefinitely, so for now we can deploy straight from the recommended.yaml file:

```shell
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml
```

Next, we can create a ServiceAccount and ClusterRoleBinding for accessing the dashboard:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
```

Finally, to access our Kubernetes dashboard, we can create an ingress using the NGINX ingress controller along with the wildcard certificate created previously:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard-ingress
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  tls:
    - hosts:
        - "*.lemker.dev"
      secretName: wildcard-secret-lemker-dev
  rules:
    - host: kubernetes.lemker.dev
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kubernetes-dashboard
                port:
                  number: 443
```

The ingress annotation backend-protocol: "HTTPS" must be added since the dashboard backend only serves HTTPS. Our dashboard can now be accessed at https://kubernetes.lemker.dev/
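If a token that never expires is preferred over a time-limited one, Kubernetes can also store a long-lived token in a Secret of type kubernetes.io/service-account-token. This sketch assumes the admin-user ServiceAccount above; the Secret name is a hypothetical choice. Keep in mind that a non-expiring cluster-admin token is a bigger risk if it leaks.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: admin-user            # hypothetical Secret name
  namespace: kubernetes-dashboard
  annotations:
    # Binds the generated token to the admin-user ServiceAccount
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token
```

Once the token controller has populated the Secret, the token can be read with `kubectl -n kubernetes-dashboard get secret admin-user -o jsonpath='{.data.token}' | base64 -d`.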

Finally, generate a token for the admin user so we can authenticate with the dashboard. We request a lifetime of one year (8760 hours) so we do not need a new token every time we log in; note that the API server may cap the requested duration depending on its configuration:

```shell
kubectl -n kubernetes-dashboard create token admin-user --duration=8760h
```

Deploying an Application

Now we can deploy our first Kubernetes application! With the ingress controller and wildcard certificate already in place, exposing the application is straightforward. The deployment consists of three parts:

- Deployment – application deployment strategy and containers

- Service – connecting the application and ingress

- Ingress – external access to our application with SSL termination
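These manifests live in the lemker-dev namespace (also one of the Reflector target namespaces), which has to exist before they can be applied. If it does not exist yet, a minimal sketch:

```yaml
# Namespace for the example application
apiVersion: v1
kind: Namespace
metadata:
  name: lemker-dev
```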

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami
  namespace: lemker-dev
  labels:
    app: whoami
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
        - image: docker.io/containous/whoami:v1.5.0
          name: whoami
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: lemker-dev
spec:
  ports:
    - name: whoami
      port: 80
      targetPort: 80
  selector:
    app: whoami
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: whoami
  namespace: lemker-dev
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  tls:
    - hosts:
        - "*.lemker.dev"
      secretName: wildcard-secret-lemker-dev
  rules:
    - host: whoami.lemker.dev
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80
```

Now the application whoami can be accessed externally at https://whoami.lemker.dev/

Also verify that the pod is receiving the correct connecting IP address in the X-Original-Forwarded-For header:

```text
Hostname: whoami-77759477c6-n9r6m
IP: 127.0.0.1
IP: ::1
IP: 10.42.0.239
IP: fe80::d4cf:d8ff:fe93:15bb
RemoteAddr: 10.42.0.222:47274
GET / HTTP/1.1
Host: whoami.lemker.dev
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/118.0
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8
Accept-Encoding: gzip, br
Accept-Language: en-US,en;q=0.5
Cdn-Loop: cloudflare
Cf-Connecting-Ip: REDACTED
Cf-Ipcountry: CA
Cf-Ray: REDACTED
Cf-Visitor: {"scheme":"https"}
Sec-Fetch-Dest: document
Sec-Fetch-Mode: navigate
Sec-Fetch-Site: none
Sec-Fetch-User: ?1
Upgrade-Insecure-Requests: 1
X-Forwarded-For: REDACTED
X-Forwarded-Host: whoami.lemker.dev
X-Forwarded-Port: 443
X-Forwarded-Proto: https
X-Forwarded-Scheme: https
X-Original-Forwarded-For: REDACTED
X-Real-Ip: REDACTED
X-Request-Id: REDACTED
X-Scheme: https
```
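The Ingress manifests in this post select the controller with the kubernetes.io/ingress.class annotation, which has been deprecated since Kubernetes 1.18 in favor of the spec.ingressClassName field. Below is a sketch of the whoami Ingress that uses the field instead. It assumes the ingress-nginx chart created its default IngressClass named nginx, which can be checked with kubectl get ingressclass.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: whoami
  namespace: lemker-dev
spec:
  # Replaces the deprecated kubernetes.io/ingress.class annotation
  ingressClassName: nginx   # assumption: default IngressClass name from the chart
  tls:
    - hosts:
        - "*.lemker.dev"
      secretName: wildcard-secret-lemker-dev
  rules:
    - host: whoami.lemker.dev
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80
```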
